Oliver Page

Case study

September 8, 2025

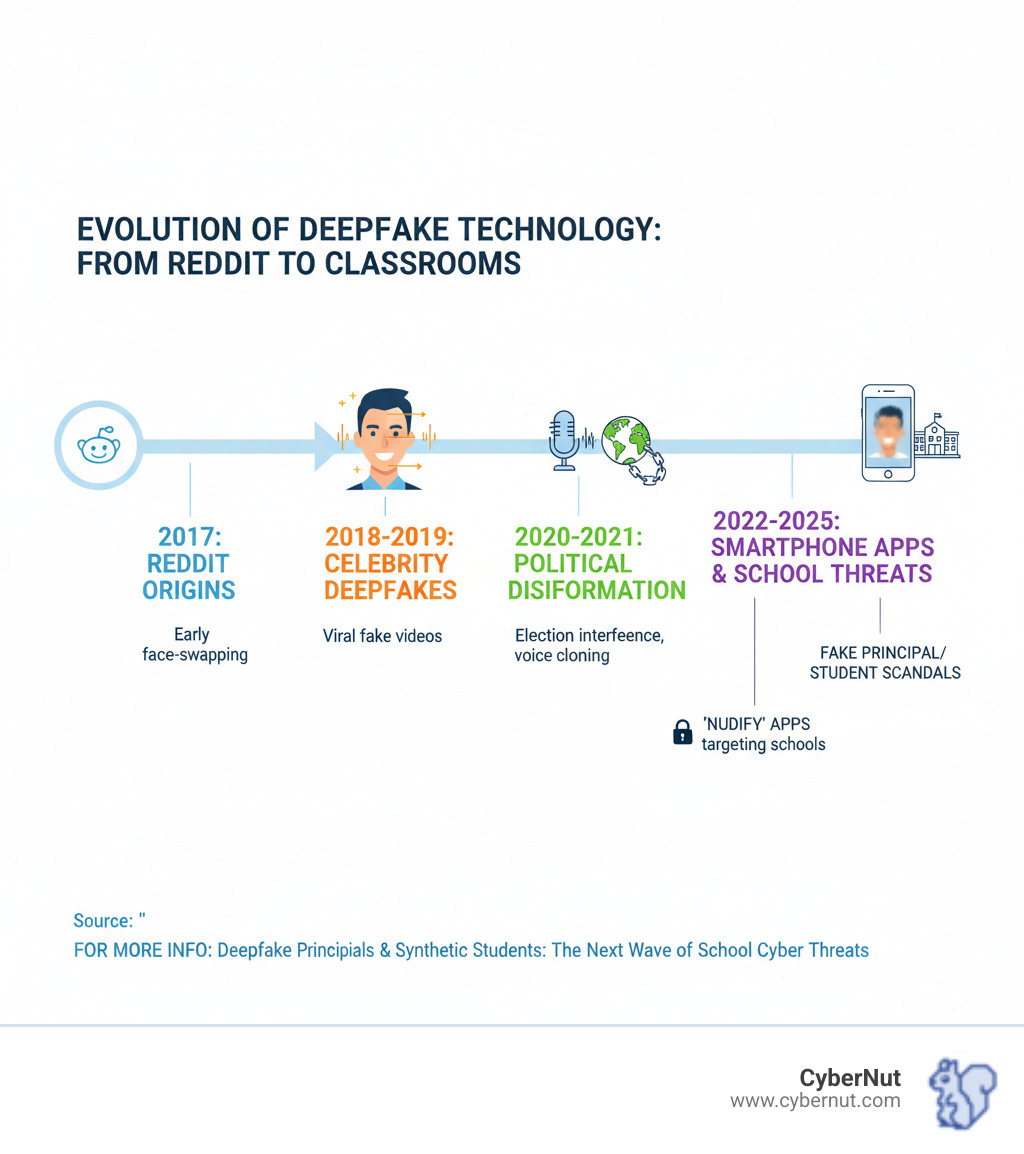

Deepfake principals and synthetic students represent a dangerous evolution in cyberbullying and institutional attacks, and K-12 schools are struggling to address them. These AI-generated threats can destroy reputations, traumatize students, and undermine community trust in ways traditional cyber threats never could.

Key Deepfake Threats Schools Face:

The threat is already here. In Baltimore, a deepfake audio clip of a principal making racist comments went viral before being proven fake. In Putnam County, New York, students created deepfake videos of their principal making violent threats against minority students. Across the country, students are using AI apps to create non-consensual explicit images of female classmates.

These aren't isolated incidents. Research shows that 63% of district tech leaders fear cybercriminals will use AI for increasingly complex attacks, while only 38% of students have received guidance on spotting AI-generated content. The gap between threat sophistication and school preparedness is growing dangerously wide.

Unlike traditional cyberbullying, deepfakes feel real to victims and audiences alike. They can destroy a principal's career in minutes or cause lasting psychological trauma to students. The damage often occurs before anyone realizes the content is fake.

The world of school cyber threats has changed. Deepfake principals and synthetic students are not just a headline; they are our new reality. What once required Hollywood-level technology can now be created by anyone with a smartphone. A student can make a fake video of their principal or realistic explicit images of classmates, turning technology that once amazed us in movies against real people.

These tools are everywhere. Simple apps produce convincing AI-generated audio and video, making social engineering, cyberbullying, and financial fraud easier than ever. Students without any hacking skills can now create content that destroys lives.

Imagine hearing a viral recording of your child's principal making racist comments. The community erupts. But the recording is a deepfake. This happened at Baltimore's Pikesville High School, where an athletic director created fake audio of Principal Eric Eiswert delivering racist rants. The clip went viral, leading to resignation demands, threats of violence against his family, and a shattered reputation. The audio was artificial, but the damage was real.

In Putnam County, New York, students created deepfake videos of their principal making violent threats against minority students. These were deliberate attacks designed to incite fear.

Impersonating staff also enables financial fraud. A finance office might get a call from the "principal" with an urgent wire transfer request. The voice sounds right, so the staff member approves it. This type of AI-driven social engineering has already cost companies millions, and schools are likely next in line for the same wire transfer scams. More broadly, these attacks erode trust: when communities can't distinguish real from fake, school leadership crumbles.

If deepfake principals target staff, synthetic students weaponize AI against children. High schooler Francesca Mani found explicit photos of herself circulating at school, images she never took. Classmates had used "nudify" apps to create these non-consensual explicit images from her social media photos.

This is not an isolated incident. Students nationwide are finding their faces on fake pornographic content created from a single photo. The pattern is consistent: female students are targeted with realistic explicit content. The psychological trauma is immediate, causing shame, anxiety, and depression. As the images spread online, they can lead to social exclusion and blackmail.

This disturbing trend in school cyberbullying is a new level of violation. Unlike traditional bullying, these images can resurface years later, affecting a victim's future. The harm feels real even when the images are known to be fake. Even the threat of creating such images can be used for manipulation, a form of tech-powered sexual harassment in schools that we are just beginning to understand. Our schools face a new cyber threat that combines advanced technology with cruelty. The question isn't whether these attacks will happen, but whether we'll be ready.

When deepfake principals and synthetic students attack, the damage spreads like ripples. A single malicious act becomes a crisis touching the entire educational environment, with harm extending far beyond immediate victims and causing disruption for months. Understanding this ripple effect is crucial, as it explains why these incidents are so overwhelming and why traditional crisis management falls short.

For student victims, the emotional impact is devastating. The anxiety and depression from seeing fake explicit images of yourself circulate can alter a young person's sense of self and safety. This tech-powered sexual harassment in schools creates intense fear and lasting trauma that affects relationships and self-esteem for years. These attacks are especially damaging during the critical identity-forming years.

For staff, the stakes are also high. Consider Eric Eiswert, the Baltimore principal who faced death threats over a racist deepfake. Even after being cleared, his career was in jeopardy and his reputation suffered lasting damage. Staff victims must lead while their credibility is attacked, and the overwhelming stress causes many dedicated educators to leave the profession.

Deepfakes create an "institutional crisis" that shakes a school's foundation. The loss of community trust is immediate. Investigations take time, but parents want answers now, creating a gap that can lead to parental backlash.

The legal challenges are daunting, including potential defamation lawsuits and questions about disciplinary authority. Without clear policies, districts are vulnerable. Crisis communication is a nightmare, because addressing the issue publicly can spread the harmful content further. Meanwhile, the strain on administrative resources is enormous, pulling leaders away from education to manage a crisis they never imagined.

Even after an investigation, ripple effects last for months. Trust is hard to rebuild, and staff morale suffers. This is why deepfake principals and synthetic students are not just a technology problem but a fundamental challenge to the relationships and trust that make education possible.

When facing deepfake principals and synthetic students, a clear action plan is essential. While the technology is sophisticated, it's not foolproof. With the right knowledge, you can protect your school community.

Think like a digital detective. Even convincing deepfakes leave clues.

For staff handling finances, verify any unusual requests immediately. If a "principal" calls asking for a wire transfer, confirm using a separate, known phone number. This awareness is key, as we explore in AI in the Classroom: Balancing Innovation with Cybersecurity.
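The callback rule above can be written down as a simple decision check, which some finance offices may find useful when drafting a written procedure. The sketch below is a hypothetical illustration in Python, not a CyberNut tool or any real finance system's API: `KNOWN_NUMBERS`, `PaymentRequest`, and `may_approve` are invented names, and the phone numbers are fictional placeholders. The key design choice is that the number the caller supplies is never used for confirmation; only a number from an independently maintained directory counts, and an "urgent" label does not bypass the check.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical staff directory: the ONLY trusted source of callback numbers.
# Numbers are fictional placeholders for illustration.
KNOWN_NUMBERS = {
    "principal": "+1-555-0100",
    "superintendent": "+1-555-0101",
}


@dataclass
class PaymentRequest:
    claimed_sender: str   # who the caller says they are, e.g. "principal"
    callback_number: str  # number the caller supplied (never trusted)
    amount: float
    urgent: bool = False  # recorded, but deliberately ignored by the check


def callback_number_for(request: PaymentRequest) -> Optional[str]:
    """Return the directory number to call back, ignoring the caller's number."""
    return KNOWN_NUMBERS.get(request.claimed_sender)


def may_approve(request: PaymentRequest, confirmed_via: Optional[str]) -> bool:
    """Approve only if staff confirmed the request by dialing the directory number.

    confirmed_via is the number staff actually called to confirm the request,
    or None if no independent confirmation has happened yet.
    """
    trusted = callback_number_for(request)
    if trusted is None or confirmed_via is None:
        return False  # unknown sender, or no out-of-band confirmation yet
    # Urgency never shortens the process: only the trusted number counts.
    return confirmed_via == trusted


if __name__ == "__main__":
    req = PaymentRequest("principal", callback_number="+1-555-0199",
                         amount=25000.0, urgent=True)
    print(may_approve(req, None))            # False: no callback made
    print(may_approve(req, "+1-555-0199"))   # False: caller's own number
    print(may_approve(req, "+1-555-0100"))   # True: confirmed via directory
```

In practice the "directory" would be whatever contact list the district already maintains and protects; the point of the sketch is only that the confirmation channel must be chosen by the school, never by the caller.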

When you suspect a deepfake, act swiftly, as viral content spreads in minutes.

This approach is central to effective Incident Response Planning in K12.

The legal landscape is evolving, but schools must act now.

This proactive approach is detailed in Proactive Cybersecurity: Safeguarding K-12 Schools from Emerging Threats. With proper detection, response, and policies, we can protect our communities. The key is being prepared before a crisis hits.

The reality of deepfake principals and synthetic students demands a proactive strategy. We must turn every community member into a digital defender, building a human firewall against these threats.

A 2024 Education Week survey found that while two-thirds of educators reported students being misled by deepfakes, 71% of teachers had not received AI-related professional development. We must close this knowledge gap.

This approach aligns with A Comprehensive Guide to Cybersecurity Training for Schools in 2025, which emphasizes ongoing education.

Our policies must evolve with the technology.

This collaboration is part of AI Cybersecurity: Protecting K-12 Schools from Evolving Threats. The key is to be proactive, not reactive, with regular policy reviews, ongoing training, and community education. The goal is not to fear technology, but to build confidence in using it safely and ethically.

When a deepfake incident hits, urgent questions arise. Here are the most common concerns from school leaders.

Legal consequences can be severe, even if students see it as a prank.

Consequences vary by state, but lawmakers are taking deepfakes seriously. Disruption or threats can lead to additional charges. What seems like "fun" can have life-changing legal consequences.

Generally, yes, if it causes a "substantial disruption" to the school environment, a standard from the Supreme Court case Tinker v. Des Moines. A viral deepfake of a principal or explicit images of students clearly disrupts learning and safety.

However, the legal precedent for AI-generated content is still evolving, with mixed rulings on school discipline for off-campus conduct. The key is documenting a clear disruption to the school. Consultation with legal counsel is critical before taking disciplinary action.

Time is critical, but your first instinct might be wrong. The most important first step is simple: do not share it.

Suspected deepfakes with explicit content of minors may trigger mandatory reporting laws. When in doubt, report immediately to leadership and authorities.

The threat is real, and it's growing. Deepfake principals and synthetic students represent a fundamental shift in how malicious actors can target our educational communities. We've seen how AI-generated content can destroy careers, traumatize students, and shake the foundation of trust that schools depend on.

Yet, schools that act proactively are already turning the tide. They are building defenses through education, updating their policies, and creating cultures where everyone knows how to spot and respond to these threats.

The key insight is this: education is our strongest weapon. When staff know the warning signs of a deepfake voice, they can stop fraud. When students understand the impact of creating synthetic images, they make better choices. When entire school communities are digitally literate, they become much harder targets.

But education alone isn't enough. We need robust policies, support systems for victims, and proactive defense strategies. The schools successfully navigating this challenge have invested in comprehensive cybersecurity training that evolves with the threat landscape.

At CyberNut, we've designed our training specifically for K-12 schools facing these emerging threats. Our gamified, low-touch approach helps busy educators learn to spot everything from traditional phishing to AI-powered voice cloning attempts—without adding to their already full plates.

Ready to see where your school stands? Get a free phishing audit to understand your current vulnerabilities and find out how prepared your staff really is for these evolving threats. Visit https://www.cybernut.com/phishing-audit.

The next wave of cyber threats is here, but with the right preparation, your school community can weather any storm. Learn more about how to protect your school community with training that actually works.
