Oliver Page

Case study

September 10, 2025

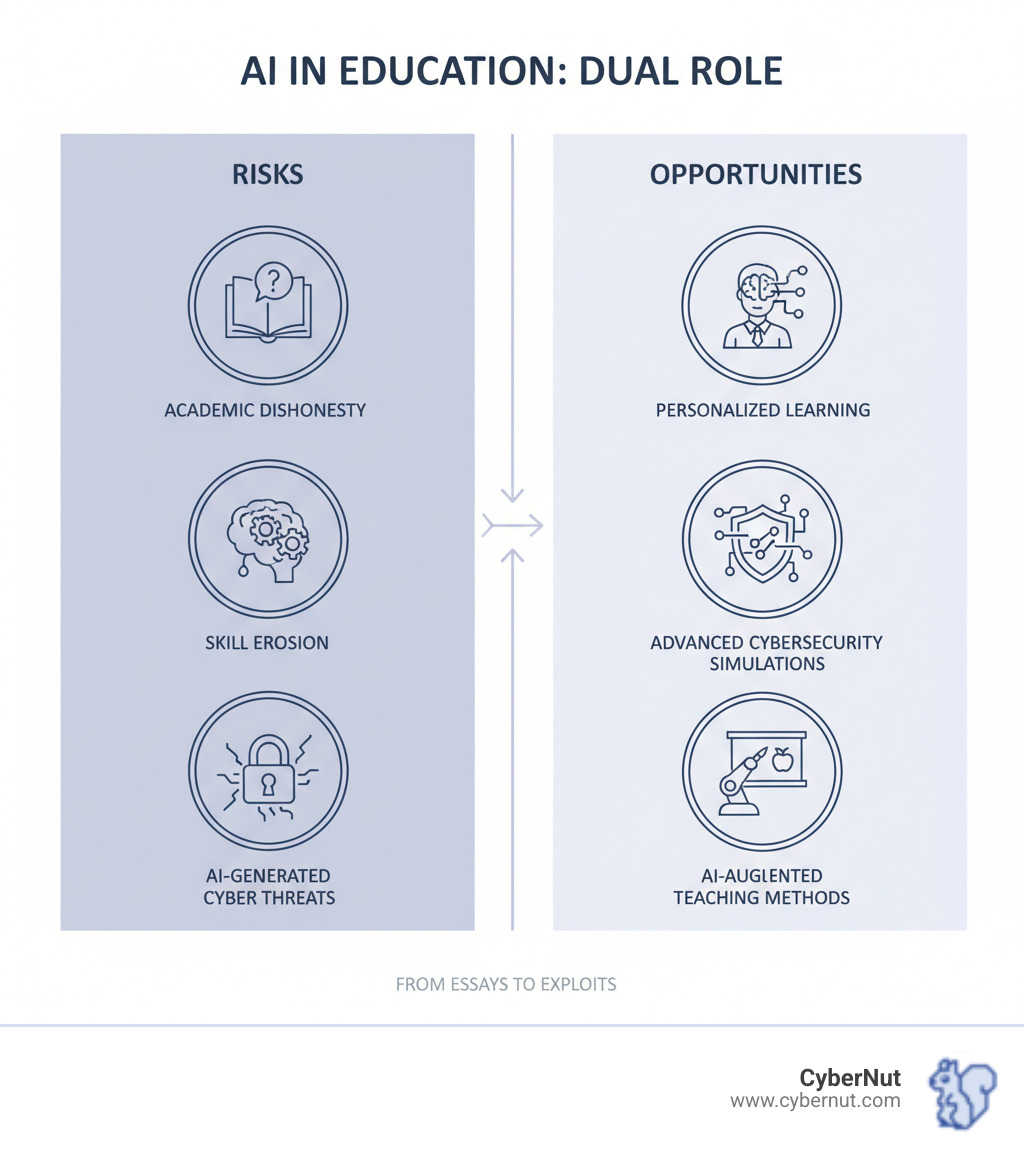

AI tools like ChatGPT are changing schools in two ways at once. They aren't just changing how students complete assignments - they're fundamentally altering the cybersecurity threat landscape that educational institutions face.

Quick Overview: AI's Dual Impact on Education

The challenge is clear: AI has lowered the barrier for both academic shortcuts and cyber threats. While students use AI to generate essays in seconds, that same technology can create convincing phishing campaigns targeting school networks.

The stakes are rising fast. Ransomware attacks on schools increased 70% in 2023, and phishing accounts for 30% of all cyber incidents. Meanwhile, educators report that some students now spend as little as 5 hours per semester on coursework because of AI reliance - down from the traditional 96 hours.

This isn't just about catching cheaters anymore. It's about preparing students for a world where AI will be essential for network defense, while ensuring they don't become vulnerable to AI-powered attacks targeting their schools today.

The quiet hum of pencils on paper has been replaced by the soft clicking of keyboards and the glow of AI chatbots. The story of AI in the classroom starts with a simple truth: the traditional classroom is gone forever. What used to take students hours of research, drafting, and revision can now happen in minutes with a well-crafted prompt.

This isn't just a minor shift in how homework gets done. It's a complete transformation of what education looks like, forcing teachers and administrators to question everything they thought they knew about learning and assessment.

Remember the five-paragraph essay? The carefully researched term paper? These academic staples are quickly becoming as outdated as overhead projectors. The reason is simple: AI tools can generate them faster than most students can type.

The numbers tell the story clearly. 43% of students use AI tools for their schoolwork. That's nearly half of all students turning to artificial intelligence to complete assignments that were designed to test their own knowledge and thinking skills.

But here's the real problem: when students rely on AI to do their thinking, they're not actually learning. Some studies suggest students now spend as little as five hours per semester on coursework, compared to the traditional expectation of 96 hours. That's not efficiency - that's educational shortcutting that leaves students unprepared for real-world challenges.

Take-home essays have become meaningless when students can generate a complete paper in minutes. Teachers are scrambling to adapt, moving toward in-class writing assignments where AI access can be controlled. Others are embracing verbal assessments that require students to explain their thinking out loud, making it impossible to hide behind AI-generated content.

The shift is dramatic and necessary. As one English teacher put it bluntly: "The cheating is off the charts. It's the worst I've seen in my entire career." Any assignment that goes home must now be assumed to have AI involvement.

Here's where things get really tricky. What exactly counts as cheating when it comes to AI? Is it wrong to ask ChatGPT to check your grammar? What about using it to brainstorm ideas? The line between helpful tool and academic dishonesty has become frustratingly blurry.

Different teachers have different rules. Different schools have different policies. Students are genuinely confused about what's allowed and what isn't. This inconsistency creates a perfect storm of student confusion and enforcement nightmares.

AI detectors seemed like the answer at first, but they quickly proved problematic. These tools produced so many false positives that many schools simply gave up using them. Imagine being accused of cheating when you actually wrote your paper yourself - it happened more often than anyone expected.

The reality is that proving AI misuse is incredibly difficult. The technology evolves faster than detection methods can keep up. By the time schools figure out how to catch one type of AI writing, students have moved on to newer, more sophisticated tools.

Smart schools are changing their approach entirely. Instead of playing an endless game of technological cat-and-mouse, they're focusing on setting clear expectations through detailed syllabus guidelines. They're teaching students about AI literacy - how to use these tools responsibly rather than as replacements for their own thinking.

The conversation has shifted from "How do we stop AI?" to "How do we teach students to use AI ethically?" This represents a fundamental change in how education approaches technology - not as an enemy to be defeated, but as a tool that requires wisdom to use well.

For schools navigating these choppy waters, understanding the balance between innovation and integrity is crucial. Learn more about AI in the Classroom: Balancing Innovation with Cybersecurity to see how successful institutions are managing this transition.

The same AI that helps students write essays can also create sophisticated cyber weapons. This isn't science fiction – it's happening right now in classrooms around the world. The line between academic assistance and malicious activity has become dangerously thin.

Think about it this way: if AI can write a convincing college application essay, it can certainly write a convincing phishing email. The technology doesn't care about intent – it simply responds to prompts. This dual nature makes AI both a powerful educational tool and a serious security concern for schools everywhere.

Here's where things get concerning. The same generative AI tools students use for homework can create genuine cyber threats with minimal effort. A curious student might start by asking an AI chatbot something seemingly innocent like "write a professional-looking email asking for login credentials." Before they know it, they've created their first phishing template.

The scary part? The AI doesn't ask questions about why you need that email. It doesn't consider the ethics. It just delivers exactly what you requested, often with impressive quality and attention to detail.

We've seen students experiment with jailbreaking AI systems to bypass safety restrictions. Others have discovered how to generate basic malware scripts or create deepfake content for social engineering attacks. What starts as curiosity can quickly escalate into something much more serious.

The barrier to entry for cybercrime has never been lower. Students no longer need years of coding experience to create convincing phishing email campaigns or write malicious code. They just need to know how to ask the right questions. This shift fundamentally changes the threat landscape schools face every day.

For a deeper look at these evolving dangers, check out our analysis of AI-Powered Attacks and Deepfakes.

Schools were already attractive targets for cybercriminals, but AI has made the situation much worse. Educational institutions hold valuable data – student records, financial information, research data – while often operating with limited IT budgets and resources.

The numbers tell a stark story. Ransomware attacks jumped 70% in 2023, with schools bearing a significant portion of that increase. These aren't random attacks either. Criminals are using AI to identify vulnerabilities, craft personalized attack messages, and automate their assault on school networks.

K-12 institutions face unique challenges because they're dealing with tech-savvy students who might inadvertently (or intentionally) become insider threats. When students can use AI to create sophisticated attacks, the traditional security perimeter becomes meaningless.

AI-boosted threats are particularly dangerous because they're harder to detect. Traditional security training teaches people to look for poor grammar or obvious scams. But AI-generated phishing emails often have perfect grammar, relevant context, and convincing details that make them nearly indistinguishable from legitimate communications.
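To make the point concrete: a filter that relies on spelling and grammar mistakes has nothing to catch when the text is polished, but structural checks still work. Below is a minimal Python sketch, assuming a hypothetical trusted district domain (`exampleschools.org`) and an illustrative, far-from-complete list of credential-request phrases. It flags an untrusted sender domain, links to untrusted hosts, and requests for credentials, none of which depend on how well the email is written. Treat it as a teaching aid, not a production mail filter.

```python
import re
from urllib.parse import urlparse

# Hypothetical trusted domain for an example district; replace with your own.
TRUSTED_DOMAINS = {"exampleschools.org"}

# Illustrative phrases that often accompany credential-harvesting requests.
CREDENTIAL_PHRASES = ("password", "verify your account", "login credentials", "sign in")

# Deliberately simple URL matcher: "http(s)://" followed by non-whitespace.
URL_PATTERN = re.compile(r"https?://\S+")


def is_trusted(host: str) -> bool:
    """True if host is a trusted domain or a subdomain of one."""
    host = host.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in TRUSTED_DOMAINS)


def phishing_signals(sender: str, body: str) -> list[str]:
    """Return structural red flags; none of them depend on grammar or spelling."""
    signals = []
    sender_domain = sender.rsplit("@", 1)[-1].lower()
    if not is_trusted(sender_domain):
        signals.append(f"sender domain not trusted: {sender_domain}")
    for url in URL_PATTERN.findall(body):
        host = urlparse(url).hostname or ""
        if not is_trusted(host):
            signals.append(f"link to untrusted host: {host}")
    if any(phrase in body.lower() for phrase in CREDENTIAL_PHRASES):
        signals.append("asks for credentials")
    return signals


# A polished, typo-free lure: a grammar-based check would let it through.
email_body = (
    "Hi Ms. Rivera, the district is migrating staff accounts this week. "
    "Please sign in at https://exampleschools-portal.com/login before Friday "
    "so your gradebook access is not interrupted."
)
print(phishing_signals("it-support@exampleschools-help.com", email_body))
```

The friendly, error-free email above trips all three signals even though it contains no typos. Real mail filters also check sender authentication results such as SPF, DKIM, and DMARC, which this sketch ignores.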

Schools need to completely rethink their defense strategies. Verification drills and updated security protocols are essential, but many institutions remain unprepared for this new reality. Our research shows that most schools lack the resources and expertise to handle AI-enhanced cyber threats effectively.

This growing crisis demands immediate attention. As we've detailed in Schools Unprepared for AI Cyber Threats: A Growing Crisis in Education, the gap between evolving threats and school preparedness continues to widen.

The solution isn't to ban AI – that's neither practical nor beneficial. Instead, schools must embrace AI-aware security training that prepares students and staff for this new landscape. The same technology creating these threats can also be our most powerful defense tool when used correctly.

Here's where things get exciting. While AI creates new challenges for academic integrity and cybersecurity, it also opens incredible doors for training tomorrow's cyber defenders. The shift from essays to exploits isn't just about managing threats - it's about transforming how we prepare students for cybersecurity careers.

The key shift? Stop trying to keep AI out of the classroom and start teaching students to master it as a defensive weapon.

Traditional cybersecurity courses face a brutal challenge: the threat landscape changes faster than textbooks can be updated. By the time a curriculum committee approves new content about the latest ransomware variant, three new attack methods have already emerged.

AI can help close this gap. Imagine course materials that update themselves with the latest threat intelligence, or AI tutors that adapt explanations to each student's background. A business student learning about SQL injection gets examples using retail databases, while a computer science student sees the technical code behind the attack.
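For the computer science student in that example, "the technical code behind the attack" might look like the minimal Python sketch below, which uses the standard-library `sqlite3` module and an in-memory, retail-style table. The table, columns, and payload are invented for illustration. The unsafe function builds SQL by pasting user input into the query text; the safe one uses a `?` placeholder so the input is always treated as data.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Build a tiny in-memory 'retail' table (made-up data for illustration)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (name TEXT, card_last4 TEXT)")
    conn.executemany(
        "INSERT INTO customers VALUES (?, ?)",
        [("alice", "1111"), ("bob", "2222")],
    )
    return conn


def lookup_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # VULNERABLE: user input is pasted directly into the SQL text.
    query = f"SELECT name, card_last4 FROM customers WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def lookup_safe(conn: sqlite3.Connection, name: str) -> list:
    # SAFE: the ? placeholder passes input as a value, never as SQL syntax.
    return conn.execute(
        "SELECT name, card_last4 FROM customers WHERE name = ?", (name,)
    ).fetchall()


conn = make_db()
payload = "' OR '1'='1"
print(lookup_unsafe(conn, payload))  # every row leaks
print(lookup_safe(conn, payload))    # no rows match
```

The classic `' OR '1'='1` payload turns the unsafe query's `WHERE` clause into a condition that is true for every row, while the parameterized version simply searches for a customer literally named `' OR '1'='1` and finds none.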

The time savings are remarkable too. Instructors using AI for exam creation and grading report cutting their workload from 30 hours per exam cycle to just 15 hours. That freed-up time? It goes straight into hands-on mentoring and practical skill development - exactly what cybersecurity students need most.

AI also keeps content fresh and relevant. Instead of teaching students about last year's phishing techniques, AI-powered curriculum can incorporate threats that emerged just days ago. Students learn to defend against the attacks they'll actually face in their careers, not outdated examples from static textbooks.

The goal isn't to replace human instructors but to amplify their impact. When AI handles the routine tasks, teachers can focus on what they do best: building critical thinking skills and real-world judgment. For more on teaching students to use AI tools safely, check out Digital Literacy: Cyber Literacy - Teaching Students to Steer AI Tools Safely.

The best cybersecurity training happens when students get their hands dirty - safely. AI creates learning environments that were impossible just a few years ago, where students can practice defending against sophisticated attacks without risking real systems.

Picture this: students face off against AI-powered attack simulations that adapt in real time. The AI notices a student struggling with network defense concepts, so it adjusts the difficulty and provides targeted hints. Another student breezing through basic scenarios gets hit with advanced persistent threat simulations that would challenge seasoned professionals.
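The adaptive loop described above can be illustrated without any AI at all. The Python sketch below is a hypothetical difficulty controller, not how any particular product works: it tracks a student's last few attempts, escalates to a harder scenario when the success rate is high, and steps down with a hint when it is low. The scenario names, window size, and 0.8/0.4 thresholds are assumptions chosen for readability.

```python
from collections import deque

# Hypothetical scenario ladder, easiest first.
LEVELS = ["basic phishing triage", "network segmentation", "lateral movement", "APT simulation"]


class AdaptiveScenario:
    """Hypothetical difficulty controller driven by a rolling success rate."""

    def __init__(self, window: int = 5, raise_at: float = 0.8, lower_at: float = 0.4):
        self.level = 0
        self.results = deque(maxlen=window)  # only the most recent attempts count
        self.raise_at = raise_at
        self.lower_at = lower_at

    def record(self, success: bool) -> str:
        """Log one attempt and return what the student should see next."""
        self.results.append(success)
        if len(self.results) < self.results.maxlen:
            return f"continue: {LEVELS[self.level]}"  # not enough evidence yet
        rate = sum(self.results) / len(self.results)
        if rate >= self.raise_at and self.level < len(LEVELS) - 1:
            self.level += 1
            self.results.clear()  # start fresh at the new level
            return f"escalate: {LEVELS[self.level]}"
        if rate <= self.lower_at and self.level > 0:
            self.level -= 1
            self.results.clear()
            return f"step down with hint: {LEVELS[self.level]}"
        if rate <= self.lower_at:
            return f"hint: {LEVELS[self.level]}"  # already at the easiest level
        return f"continue: {LEVELS[self.level]}"


session = AdaptiveScenario(window=3)
for outcome in [True, True, True]:
    print(session.record(outcome))
```

A real platform could replace the fixed thresholds with richer signals (time taken, hints used, which concepts were missed), but the basic decision structure would stay similar.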

Capture The Flag (CTF) competitions become incredibly realistic when AI generates dynamic challenges. Instead of solving the same static puzzles every semester, students encounter fresh scenarios that mirror current threat landscapes. One week they're defending against AI-generated phishing campaigns, the next they're analyzing malware that uses machine learning to evade detection.
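Fully AI-generated scenarios are beyond a short example, but one simpler, non-AI building block for keeping CTF challenges fresh is deriving each flag from a secret key, so every student and every semester gets a different answer and copied flags don't validate. The Python sketch below uses HMAC-SHA256 from the standard library; the `FLAG{...}` format, the 16-character truncation, and all identifiers are assumptions for illustration.

```python
import hashlib
import hmac


def make_flag(secret: bytes, challenge_id: str, student_id: str, term: str) -> str:
    """Derive a per-student, per-term flag; the same inputs always give the same flag."""
    message = f"{challenge_id}|{student_id}|{term}".encode()
    digest = hmac.new(secret, message, hashlib.sha256).hexdigest()[:16]
    return f"FLAG{{{digest}}}"


def check_flag(secret: bytes, challenge_id: str, student_id: str, term: str, submitted: str) -> bool:
    """Compare in constant time so the checker doesn't leak how much of a guess was right."""
    expected = make_flag(secret, challenge_id, student_id, term)
    return hmac.compare_digest(expected.encode(), submitted.encode())


secret = b"instructor-only-secret"  # hypothetical; keep the real key off student machines
flag = make_flag(secret, "web-01", "student-123", "2025-fall")
print(check_flag(secret, "web-01", "student-123", "2025-fall", flag))  # True
print(check_flag(secret, "web-01", "student-456", "2025-fall", flag))  # False (copied flag)
```

Because the flag depends on the term as well as the student, last semester's write-ups stop being a shortcut without the instructor having to rebuild the challenge itself.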

Even controversial techniques become teaching tools. Students learn AI jailbreaking methods like DAN ("Do Anything Now") and Character Play - not to cause trouble, but to understand how attackers might manipulate AI systems. It's like teaching lock-picking to security guards: you need to know how the bad guys think to defend effectively.

Even vulnerability scanning and incident response training benefit from AI simulation. Students practice coordinating responses to data breaches, complete with realistic pressure and time constraints. The AI can simulate panicked executives, confused users, and media pressure - preparing students for the human side of cybersecurity incidents.

Our AI Phishing Simulator (Students) demonstrates this approach in action, creating realistic phishing scenarios that help students recognize and respond to next-generation social engineering attacks.

These simulated environments build the practical, hands-on skills that cybersecurity employers desperately need. Students graduate with experience defending against the latest AI-powered attacks, not just theoretical knowledge from outdated case studies.

The allure of AI in education is undeniable, offering shortcuts and efficiencies. But what are the long-term consequences for our students and the professional world they will enter? The journey from essays to exploits ends with a crucial question about the preparedness of our future workforce.

Picture this: a student breezes through four years of cybersecurity courses, AI handling most of their assignments. They graduate with excellent grades but freeze when faced with their first real network breach. This scenario isn't science fiction – it's becoming reality.

Skill erosion is the hidden danger lurking behind AI's convenience. When students consistently rely on AI to bypass foundational learning, we risk creating graduates who look great on paper but crumble under pressure. In cybersecurity, this isn't just about poor job performance – it's about national security, critical infrastructure, and protecting our most vulnerable institutions.

The risk is real. Students who over-rely on AI often struggle disproportionately in advanced courses or in real-world scenarios that AI cannot solve. Without substantial reforms in how we assess learning, academic degrees risk becoming meaningless certificates rather than proof of genuine competence.

The deeper concern goes beyond individual career prospects. In cybersecurity, superficial knowledge can be dangerous. A graduate who doesn't truly understand network protocols might miss critical vulnerabilities. Someone who relied on AI for threat analysis might fail to recognize sophisticated attack patterns. These gaps don't just hurt the individual – they compromise entire organizations.

The job market is already adapting to this new reality. Some employers now use pen-and-paper tests during interviews, specifically to verify that candidates actually know what their resumes claim. It's a telling sign when industries feel they can't trust traditional academic credentials.

But here's the interesting twist: employers aren't looking for people who avoid AI. They want professionals who can work alongside AI effectively while maintaining critical thinking skills. The ideal cybersecurity professional of tomorrow isn't someone who fears AI – they're someone who can harness its power while recognizing its limits.

AI management and oversight tops the list of desired skills. This means knowing how to deploy, configure, and maintain AI-powered security tools without becoming dependent on them. It's about being the conductor of an AI orchestra, not just another instrument.

Prompt engineering has emerged as a surprisingly valuable skill. The ability to craft effective prompts that extract accurate, relevant information from AI systems is becoming as important as knowing how to use a search engine was twenty years ago.

Ethical judgment separates good cybersecurity professionals from great ones. AI can analyze threats and suggest responses, but it takes human wisdom to navigate privacy concerns, understand bias in AI recommendations, and make decisions that balance security with user rights.

Most importantly, employers want professionals with critical evaluation skills. This means spotting AI "hallucinations," verifying information from trusted sources, and knowing when to trust AI recommendations versus when to dig deeper. It's about being skeptical in the best possible way.

The future belongs to adaptable learners who can continuously evolve with rapidly changing AI technologies and cyber threats. As we explore in Preparing School IT Teams for the AI-Infused Threat Landscape, the goal isn't to create AI-free zones but to cultivate deep, meaningful learning that goes far beyond superficial AI-assisted success.

The question isn't whether AI will reshape cybersecurity careers – it already has. The question is whether we're preparing students to thrive in this new landscape or simply survive it.

Let's address the burning questions that keep educators, IT administrators, and parents awake at night. AI's impact on classroom cybersecurity raises complex issues that demand clear, practical answers.

Here's the reality check: simply banning AI is like trying to hold back the tide with a paper towel. It's not going to work, and you'll just end up frustrated and soggy. The smart money is on redesigning how we assess students rather than playing an endless game of digital whack-a-mole.

The winning strategies focus on making AI less useful for cheating. In-class assignments are making a comeback because it's hard to sneak ChatGPT into a supervised exam room. Verbal presentations are golden - AI can write an essay, but it can't stand up and defend ideas under questioning from a sharp teacher.

Project-based learning is where things get interesting. When students tackle real-world problems that require original thinking and creative solutions, AI becomes less of a crutch and more of what it should be - a research assistant. Think of assignments that require students to interview community members, analyze local data, or create something genuinely unique to their experience.

The game-changer is teaching AI as a tool rather than treating it like contraband. When students understand how to cite AI assistance, recognize its limitations, and know when it's appropriate to use, they develop the critical thinking skills that make them valuable in the real world. It's about shifting from a "gotcha" mentality to fostering genuine learning.

Can offensive security be taught safely in the classroom? Absolutely, and it should be - but with the right guardrails in place. Teaching students about offensive security isn't about creating digital troublemakers. It's about understanding how attackers think so our future defenders can stay one step ahead.

The magic happens in controlled, simulated environments - think of them as cybersecurity playgrounds where students can safely explore the dark side without causing real damage. These "walled garden" labs let students practice penetration testing, experiment with vulnerability discovery, and understand exploit development without putting anyone at risk.

AI-powered vulnerability scanning teaches students how attackers might automate their reconnaissance. Students learn to spot weaknesses in code and systems, but more importantly, they learn how to patch those holes. When students design phishing campaigns in training scenarios, they're not learning to be scammers - they're learning to recognize the psychological tricks that make these attacks so effective.

The key is strict ethical guidelines and constant reinforcement that these skills exist to protect, not to harm. Every exercise should be framed within the context of defense, with clear boundaries about what's acceptable and what crosses the line.

While knowing how to operate AI tools matters, the real superpower is critical evaluation and human judgment. Think of AI as a brilliant intern who's incredibly fast but sometimes confidently wrong.

The best cybersecurity professionals of tomorrow will be masters at validating AI output. They'll independently verify recommendations, double-check generated code, and never take AI's word as gospel. They understand that AI can "hallucinate" - producing convincing but completely incorrect information - and they've trained themselves to spot these digital mirages.
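Here is what "double-checking generated code" can look like in practice. The Python sketch below pairs a hypothetical AI-suggested IPv4 validator (plausible-looking, but its regex never checks the 0-255 range) with an independent reference built on the standard library's `ipaddress` module, then lists the inputs where the two disagree. Both the suggested function and the test cases are invented for illustration.

```python
import ipaddress
import re


# Hypothetical AI suggestion: checks the shape "d.d.d.d" but never the 0-255 range.
def ai_suggested_is_ipv4(s: str) -> bool:
    return re.fullmatch(r"\d{1,3}(\.\d{1,3}){3}", s) is not None


def reference_is_ipv4(s: str) -> bool:
    """Independent check using the standard library's parser."""
    try:
        ipaddress.IPv4Address(s)
        return True
    except ValueError:  # AddressValueError is a subclass of ValueError
        return False


def disagreements(candidate, reference, cases):
    """Return every input where the candidate and the trusted reference differ."""
    return [c for c in cases if candidate(c) != reference(c)]


cases = ["10.0.0.1", "192.168.1.255", "256.1.1.1", "999.999.999.999", "1.2.3"]
print(disagreements(ai_suggested_is_ipv4, reference_is_ipv4, cases))
# ['256.1.1.1', '999.999.999.999']
```

The shape check happily accepts `256.1.1.1` and `999.999.999.999`, which the reference rejects. A handful of edge cases run against a trusted oracle is often enough to expose a confident but wrong suggestion before it reaches production.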

Understanding AI limitations separates the pros from the amateurs. Great cybersecurity professionals know when AI is the right tool for the job and when they need to roll up their sleeves and dig deeper themselves. They can identify bias in AI models that might create blind spots in security coverage.

Most importantly, they apply strong ethical reasoning to AI-driven decisions. They understand the implications of automated security responses, respect privacy concerns, and ensure that efficiency never comes at the cost of fairness or safety.
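One way to keep human oversight over automated security responses is to route every AI recommendation through an explicit policy before anything runs. The Python sketch below is hypothetical: the `Recommendation` fields, the 0.95 confidence threshold, and the rule that anything locking out a person goes to a human are assumptions, not a standard. It shows the shape of the idea: low-impact, high-confidence actions can run automatically and get logged, while everything else waits for a person.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    action: str           # e.g. "block_ip", "disable_account"
    target: str
    confidence: float     # model-reported confidence, 0.0-1.0
    affects_people: bool  # True if the action would lock out a student or staff member


# Hypothetical policy threshold; each district would set its own.
AUTO_APPLY_MIN_CONFIDENCE = 0.95


def route(rec: Recommendation) -> str:
    """Decide whether an AI recommendation may run without a human decision."""
    if rec.affects_people:
        return "human review"  # locking out a person always needs a human
    if rec.confidence >= AUTO_APPLY_MIN_CONFIDENCE:
        return "auto-apply and log"
    return "human review"


print(route(Recommendation("block_ip", "203.0.113.7", 0.98, False)))       # auto-apply and log
print(route(Recommendation("disable_account", "teacher42", 0.99, True)))  # human review
```

Note that confidence alone never overrides the people-affecting rule: an AI that is 99% sure a teacher's account is compromised still has to convince a human before that teacher is locked out.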

The future belongs to professionals who can strategically integrate AI into their work - using it to augment their capabilities while maintaining human oversight and judgment. They're not trying to replace themselves with robots; they're becoming more effective humans with really powerful digital assistants.

The move from essays to exploits is more than just another tech trend - it's a fundamental shift that demands immediate attention from educators and administrators alike.

We've witnessed how AI has turned traditional academic assessments upside down, with nearly half of students now using these tools for schoolwork. But the implications stretch far beyond the classroom. The same technology that can write a convincing essay in seconds can also craft sophisticated phishing emails or generate malicious code with minimal technical knowledge.

This isn't about going back to the old ways. The AI genie is out of the bottle, and trying to stuff it back in won't work. Instead, schools need to accept this new reality and use it to their advantage.

The most successful educational institutions will be those that stop fighting AI and start teaching with it. This means redesigning assessments to be AI-resistant while simultaneously using AI to create more engaging, personalized learning experiences. It means building cybersecurity curricula that prepare students for a world where AI will be both their most powerful tool and their greatest threat.

The stakes couldn't be higher. With ransomware attacks on schools up 70% and AI making it easier than ever to create convincing cyber threats, we need a new generation of defenders who understand both the promise and peril of artificial intelligence. These future cybersecurity professionals must be able to manage AI systems, think critically about AI output, and apply human judgment where technology falls short.

CyberNut's specialized training programs are designed specifically to help K-12 institutions navigate this complex landscape. Our automated, gamified approach makes cybersecurity training engaging for students while building the human firewall schools desperately need against AI-powered attacks.

The future belongs to students who can work alongside AI while maintaining their critical thinking skills. By integrating AI-aware training and simulations into our curricula, we can equip them with the knowledge to navigate and secure an increasingly automated world.

Ready to see how vulnerable your school is to AI-powered phishing? Get a free phishing audit today.

Find out how our automated training platform can build a stronger human firewall in your district. Explore the CyberNut platform.
